ℹ️ Quick note

This blog post draws heavily from two other pieces of media:

I will try to make this readable for anyone who hasn’t checked them out or doesn’t want to. But if you are interested, they are both really good, and might make some of the points that I won’t spend much time on a lot clearer.

Also, while I do have a general idea of what I want to say, I’m coming up with the structure of the text mostly as I write. I don’t want to adhere to a set essay structure, because I think that’s boring, so I’ll try to make sure everything follows once I’m done. Wherever I fail, I hope you’ll still be able to understand my point.

Finally, this post contains some queries made to OpenAI’s ChatGPT. For all of these queries, I am using the GPT-3.5 version, because I do not have the funds to waste $20 on a small blog post that at most 20 people might read, and also, partly, because I have some moral concerns about giving money to OpenAI. Perhaps GPT-4 has answers to my questions that completely destroy my argument; I will only know if someone crosses that bridge for me.

A few days ago, I had a small discussion with my older brother on the topic of AI. He’s at least somewhat of a defender of its use and continued growth, while lately I’ve been very skeptical of the benefits it might bring to society.

Just to add some context here: we’ve both studied Computer Engineering. I’m only just finishing my bachelor’s at the age of 22, and he’s 18 years older and a senior DevOps engineer at some US-based tech company.

At some point, the conversation reached the topic of AI art, and thus we stumbled onto the debate of whether AI was ‘creative’. Both of us have a very matter-of-fact opinion on what creativity is, probably partially informed by our atheist / agnostic and very materialistic views about reality. See, if we are able to agree that there is nothing real other than matter and energy, which is a lot to grant, philosophically speaking, then there is nothing to human creativity that cannot be explained as a sequence of electrical impulses in the brain, complex as they may be.

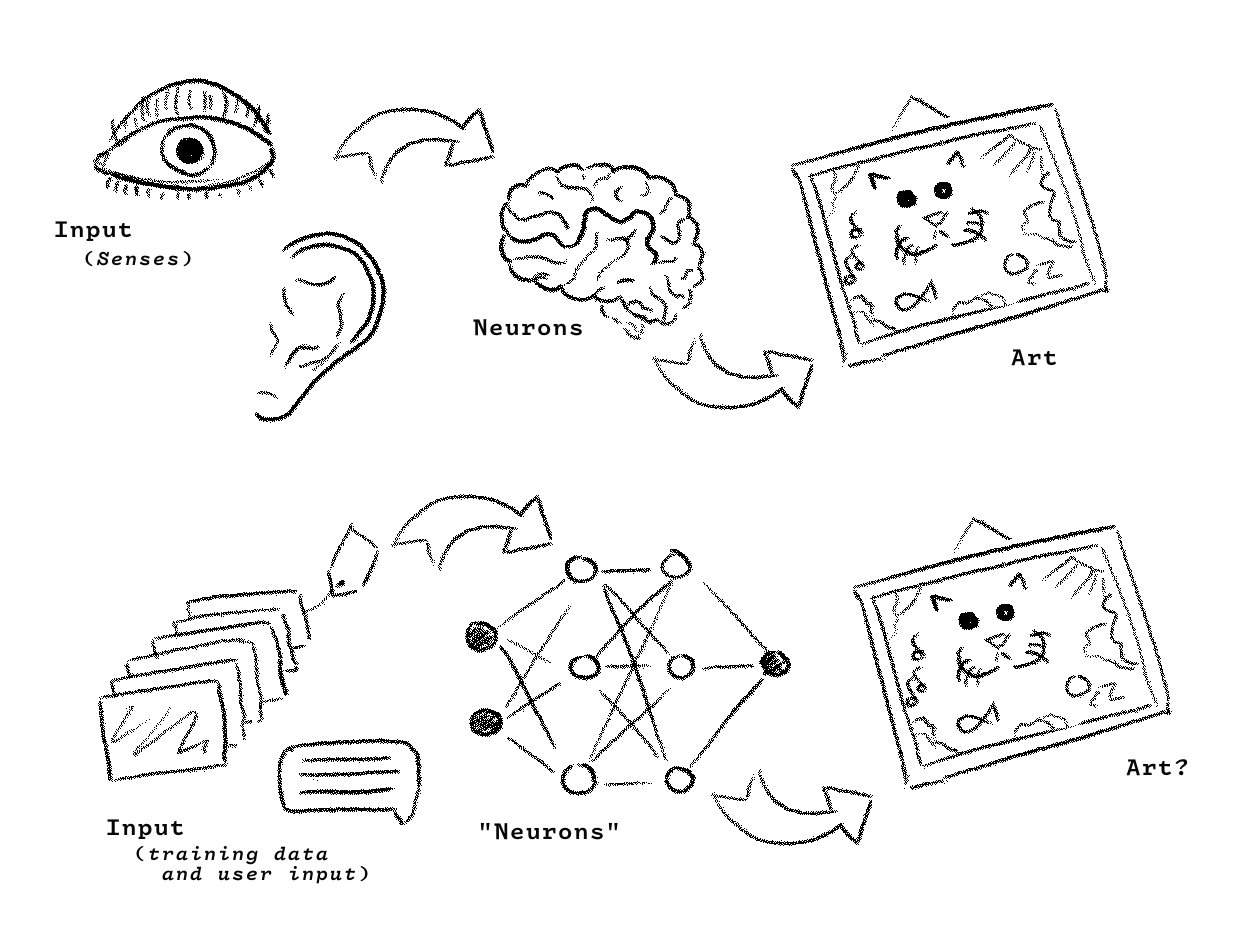

What exactly, he argues, is the difference, then, between a human getting a set of inputs through their senses, processing them in the brain, and outputting a new thing based on those inputs, and an AI doing more or less the same?

And sure, at this scale, both processes do look very similar. Now, there are a few arguments that I think are valid, if well articulated, that I am not going to make in this post, for several reasons.

One of them is pointing at the difference between the human brain and modern AI neural networks. Proponents of AI insist that there is an obvious similarity between them, even in the fact that intermediate nodes in a neural network produce what looks like random outputs. And sure, on closer inspection, one could point out that the inner workings of the brain are not ‘seemingly random’, but that many of them remain unexplained.

This, to me, is definitely an interesting argument, and perhaps I’d be more keen to explore it if I knew more about the inner workings of the brain. I, however, do not, and I suspect that we would hit a wall if we followed this path. Not knowing how the brain works gives us an escape hatch: computers can never work ‘the same’ as brains so long as we don’t understand how brains work. But this escape hatch also means that, for all we know, brains might actually work very similarly to neural networks. That leaves very little room for discussion and gives a deeply unsatisfying answer: we just don’t have the information to reach a conclusion.

Another is the difference in ways of perception. I think the importance of qualia³ to creative production underpins the argument that I am gonna make, but it’s not its only support. Plus, to some readers the appeal to qualia might read as an appeal to mysticism, and while I’m not entirely opposed to such a thing, I can see how it might not be very convincing.

With this said, I think I can start.

What does AI think?

As a starting point, I thought it would be funny to ask ChatGPT what it thinks about creativity. For some reason, I believed its answer would very much defend that it is creative. For a second, I too was thinking of it as a person that would jump to defend itself at the thought that it was under attack. This thought, of course, was not produced… nor was any.

ChatGPT is very inconsistent in its answers to the question of its creativity: it jumps from reassuring us that it is not creative and can merely fake creativity, to defending that the true difference between its creativity and ours is one of depth.

I get more interesting answers, however, when I ask ‘What is creativity?’. Here, ChatGPT loves making lists of key components of creativity. I’m gonna take the liberty of ignoring the less interesting ones, but some of its points do warrant some examination, two of them in particular.

Novelty and Originality

Key component of creativity number 1 in ChatGPT’s list is ‘Originality’, or the capacity to produce ideas that are new. This feels like a cop-out to me: ‘new’ is doing a lot of heavy lifting in that sentence.

What exactly is a ‘new’ idea? I can’t make the argument as well as Kirby Ferguson, so I won’t try. But ‘new’ ideas, as we sometimes think of them, these works of genius given like celestial gifts to selected individuals, ideas born from nothing: those don’t exist. Ideas are created through “copy, transformation and combination”. If this clicks in your head, perfect, we agree. If not, I would heavily encourage you to watch “Everything is a Remix”; it’s linked at the top of the post.

Here’s the problem: there is nothing in ‘copy’, ‘transform’ and ‘combine’ that an AI cannot do. Copying underpins all of computing, and while the extent to which it can transform or combine is debatable, the fact that it can, I believe, is obvious.

And so we fall into a strange argument: to say that AI is not creative, we have to place an imaginary line: how much does someone need to modify or combine ideas for the resulting one to be considered creative? And, wherever we place that line, couldn’t we eventually build a bigger AI that meets the criteria?

Divergent thinking

ChatGPT often mentions the importance of ‘risk taking’ to creativity: stepping out of one’s comfort zone and challenging norms and conventions. It also talks of ‘imagination’ and ‘associative thinking’. And I must admit I was quite disappointed to read this, because, if not entirely it, I think this gets very close to the actual point I wanted to make in this essay.

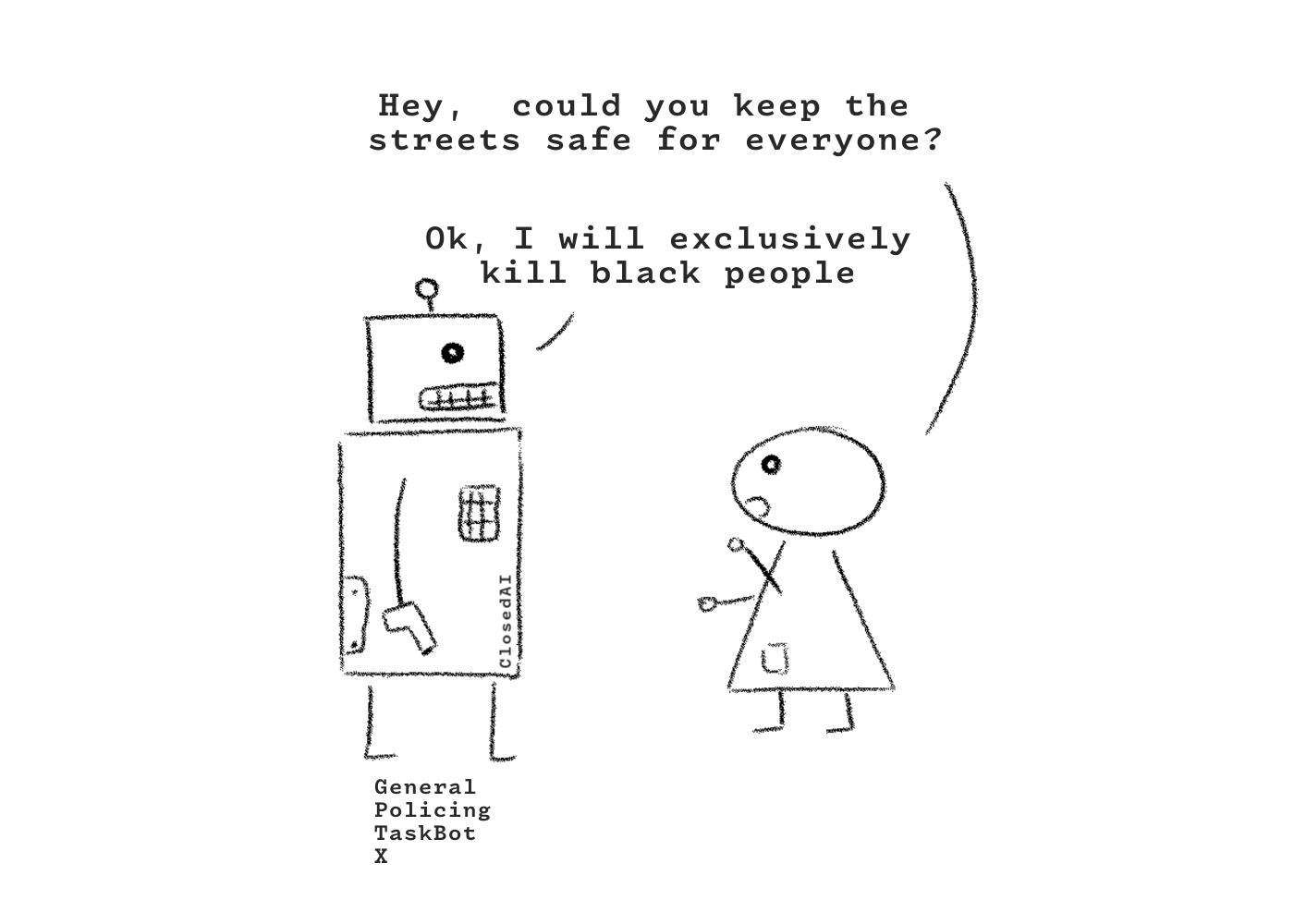

AI is necessarily trained to obey requests and to produce an expected output. In fact, this is all AIs are: complex and powerful mathematical functions created to minimize error. Which means that, necessarily, when you ask for a picture of a cat, it will produce a picture of a cat that minimizes error, that is, one that looks the most like a cat, with some, but little, variation.
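To make ‘minimize error’ a bit more concrete, here is a toy sketch in Python. It is not how ChatGPT or any image generator is actually built: the one-parameter ‘model’, the `train` function and the numbers standing in for cat pictures are all made up for illustration. It only shows that something trained to minimize squared error against its examples settles on their average.

```python
def train(examples, steps=200, lr=0.1):
    """Fit a single number to the examples by gradient descent on squared error."""
    guess = 0.0
    for _ in range(steps):
        # Gradient of the mean squared error with respect to the current guess
        grad = sum(2 * (guess - x) for x in examples) / len(examples)
        # Nudge the guess in the direction that reduces the error
        guess -= lr * grad
    return guess


# Hypothetical "cat" measurements; the last one is the odd cat out
examples = [1.0, 2.0, 3.0, 10.0]

result = train(examples)
mean = sum(examples) / len(examples)
print(round(result, 3), mean)
```

In this sketch the trained guess lands on the mean of the examples, not on the odd one out: the most average ‘cat’ is the one with the least error.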

Here’s my argument then: Creativity requires divergent thinking, or the ability to disobey, even when it’s in small ways. To create a picture of a cat that is creative, you have to think of stuff different from cats, sometimes opposite.

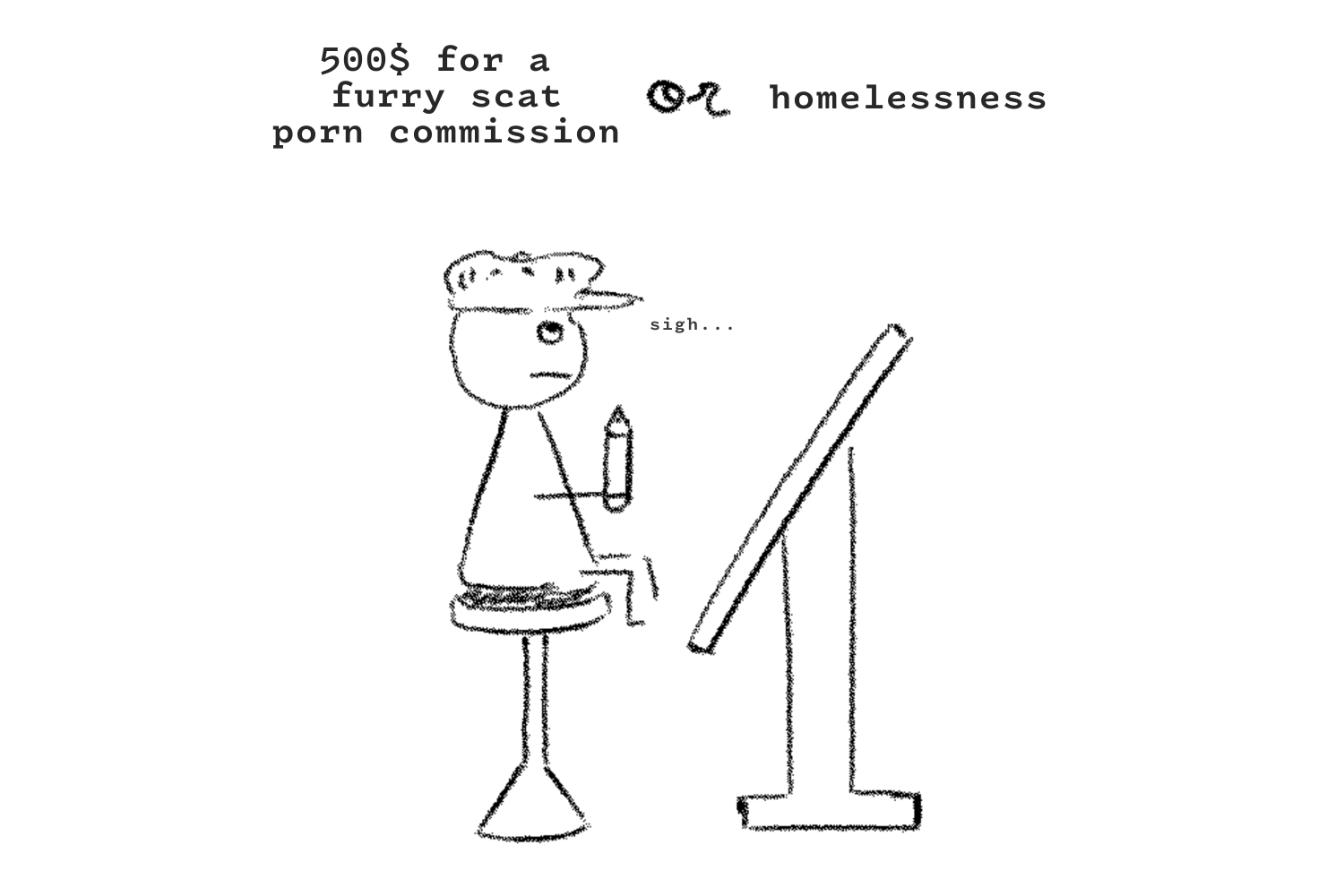

I feel like this is the kind of statement that will get two reactions: people saying it’s obvious and didn’t need this much text around it, and people saying that I’m just wrong and don’t know what I’m talking about. To me, it feels natural: there is this feeling that, oftentimes, corporate ‘art’ can be soulless and unoriginal, and this fits pretty well with those notions. Most corporate art is produced for a specific objective, one that the artist is usually not in control of, so there’s little chance to think divergently, because you run the risk of the work not being accepted, with all the consequences that brings.

I said in the previous section that ‘new’ ideas don’t really exist. But, frankly, I feel like I was lying: there have been several times where I have stumbled into a strange small itch.io game, or a 3-hour YouTube video, or a good book where everything led to the inevitable feeling: this feels new. And I do believe that large leaps when combining ideas, or strange and wild combinations, do add up to create concepts that feel new and that can be revolutionary. But most if not all of those large leaps require subjectivity and thinking outside the box, which is something modern AI, thankfully, cannot do.

And so comes the “bigger machine” argument.

Size matters (?)

So, what if we just build a bigger AI that is capable of divergent thinking? Do I just not believe that’s possible? Not really, I think it’s perfectly possible, perhaps not right now, but eventually. The real problem is whether we should. I believe that divergent thinking, at its core, requires the capacity to lie and to disobey. Both of them are dangerous behaviours on their own, even in humans. And although they are very useful, and key components of humanity and its history, I don’t believe the same about AI.

Not only is an AI that is capable of disobeying a key evil character in many futuristic media; and not only do I think it might be actually dangerous, even if not in the ways depicted by such media. I believe it also just wouldn’t be useful. Such an AI doesn’t sound like the kind of tool that would help humanity progress or help anyone. It also simply violates Asimov’s second law of robotics⁴.

When one talks about the future of any technology it’s very easy to engage in magical thinking. And so when talking about AI, we often reach for the stories that we’ve heard as evidence of what could happen. This kind of attempt at predicting the future is, in my eyes, futile. There is little of value that we can predict about how this technology will develop, and I very much doubt some little contradiction with Asimov’s laws is gonna stop an already billion-dollar industry.

Anyone that knows me may already have an idea of what I am about to say: The problem isn’t AI, the problem is the political and economic system in which it is being developed. That is the actual cause of most realistic fears about AI, about how it’ll take jobs and render large chunks of the population useless. This is only possible in a system that cares more about profits than the wellbeing of real people. And to this, I’ll quote a small bit at the end of Jimmy McGee’s video:

“When you start saying stuff like this people start coming out of the woodwork to randomly tell you that the Soviet model didn’t work. An alien system from a society we don’t live in from a time long past is only relevant if you think politics is an RPG where you have to pick a class. It’s actually the process of advocating and fighting for what we want.”